DistributedDataParallel non-floating point dtype parameter with

🐛 Bug: Using `DistributedDataParallel` on a model that has at least one non-floating point dtype parameter with `requires_grad=False`, with a `WORLD_SIZE` <= nGPUs/2 on the machine, results in an error "Only Tensors of floating point dtype can re…"
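The report includes no code. The following is a minimal sketch, assuming PyTorch, of a model that matches the described condition (an integer-dtype `nn.Parameter` with `requires_grad=False`). It also shows a commonly used workaround that is not taken from the report: registering the integer tensor as a buffer so that it is no longer a parameter. The class names and the `non_float_params` helper are hypothetical. The sketch does not reproduce the multi-GPU DDP failure itself, which needs an initialized process group and more than one GPU per process.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical model matching the report: one integer-dtype
    nn.Parameter with requires_grad=False."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # requires_grad must be False here: nn.Parameter raises a
        # RuntimeError if asked to make an integer tensor require grad.
        self.step = nn.Parameter(torch.zeros(1, dtype=torch.long), requires_grad=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x)


class ModelWithIntBuffer(nn.Module):
    """Workaround sketch (an assumption, not from the report): keep the
    integer tensor as a buffer instead of a parameter."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Buffers are stored in state_dict() and moved by .to()/.cuda(),
        # but are not returned by parameters().
        self.register_buffer("step", torch.zeros(1, dtype=torch.long))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x)


def non_float_params(module: nn.Module) -> list:
    """Return the names of parameters whose dtype is not floating point."""
    return [name for name, p in module.named_parameters() if not p.is_floating_point()]


if __name__ == "__main__":
    print(non_float_params(ModelWithIntParam()))   # prints ['step']
    print(non_float_params(ModelWithIntBuffer()))  # prints []
```

Running `non_float_params` on a model before wrapping it in `torch.nn.parallel.DistributedDataParallel` is one way to spot parameters that fit the condition described above.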

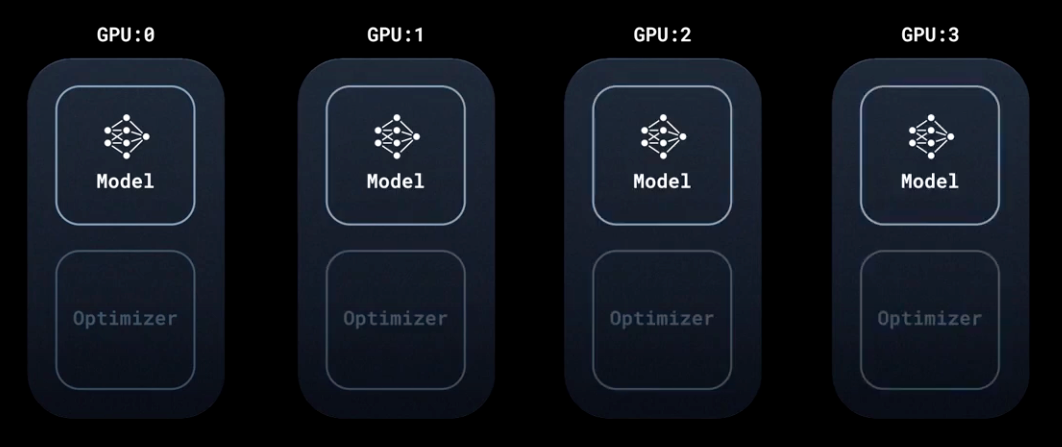

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

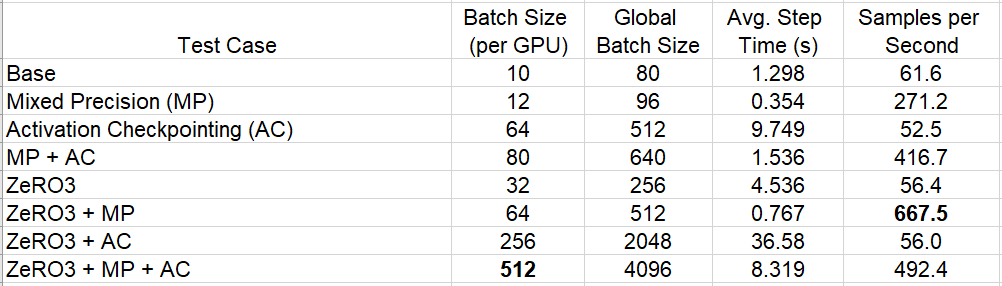

Optimizing model performance, Cibin John Joseph

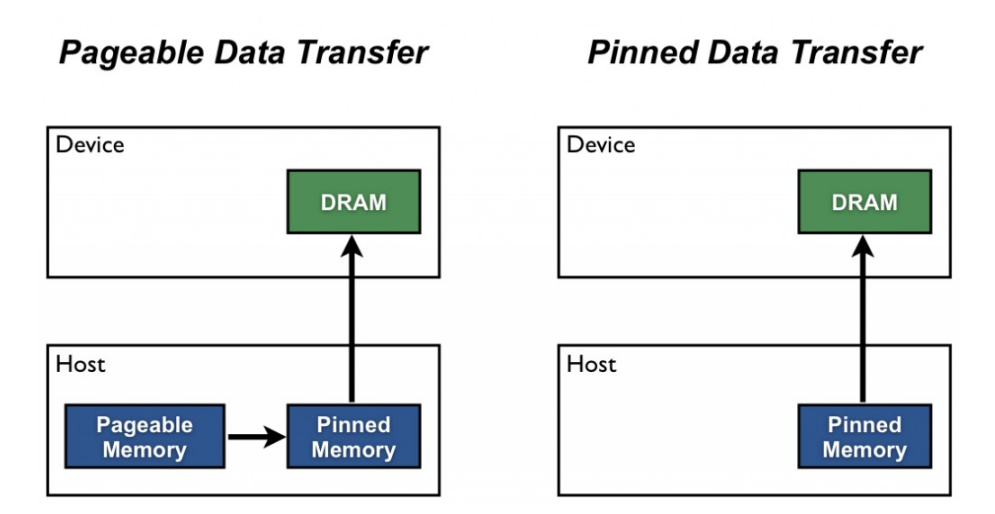

How to Increase Training Performance Through Memory Optimization, by Chaim Rand

Support DistributedDataParallel and DataParallel, and publish Python package · Issue #30 · InterDigitalInc/CompressAI · GitHub

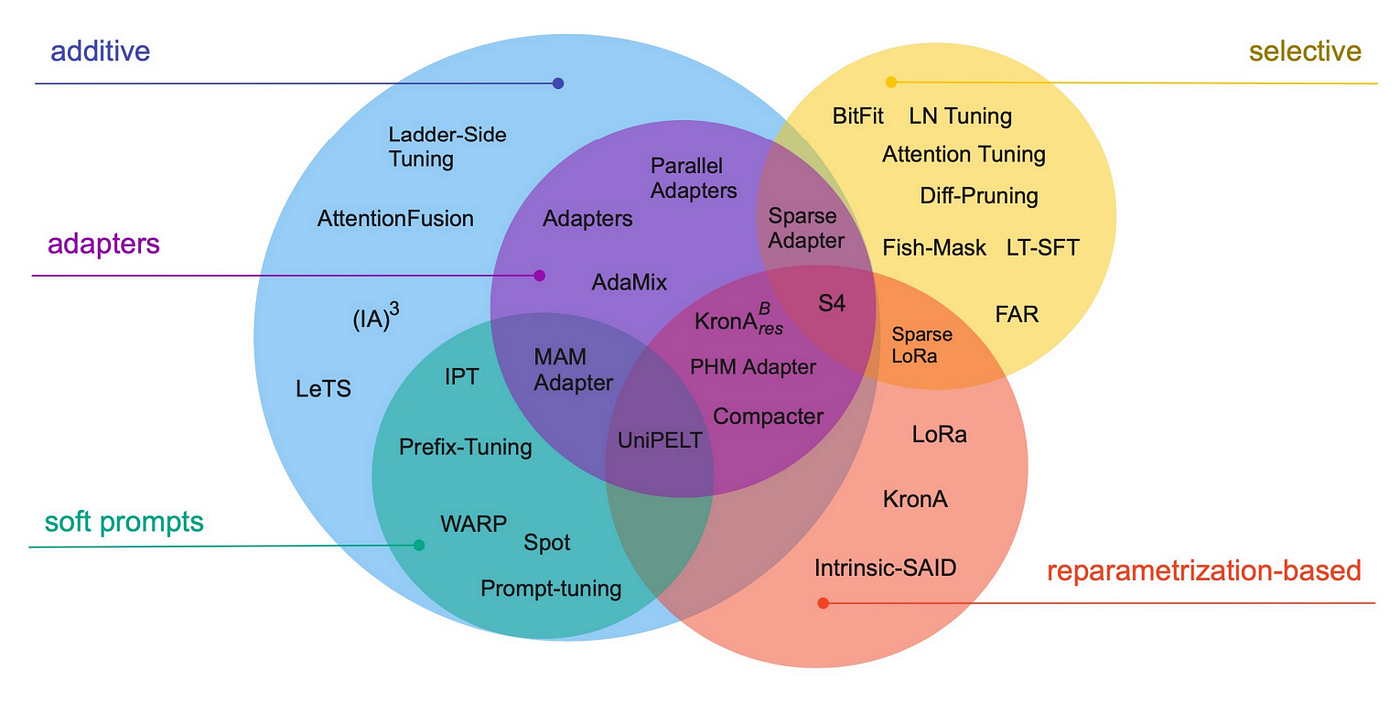

Finetune LLMs on your own consumer hardware using tools from PyTorch and Hugging Face ecosystem

55.4 [Train.py] Designing the input and the output pipelines - EN - Deep Learning Bible - 4. Object Detection - Eng.

change bucket_cap_mb in DistributedDataParallel and md5 of grad change · Issue #30509 · pytorch/pytorch · GitHub

How to estimate the memory and computational power required for Deep Learning model - Quora

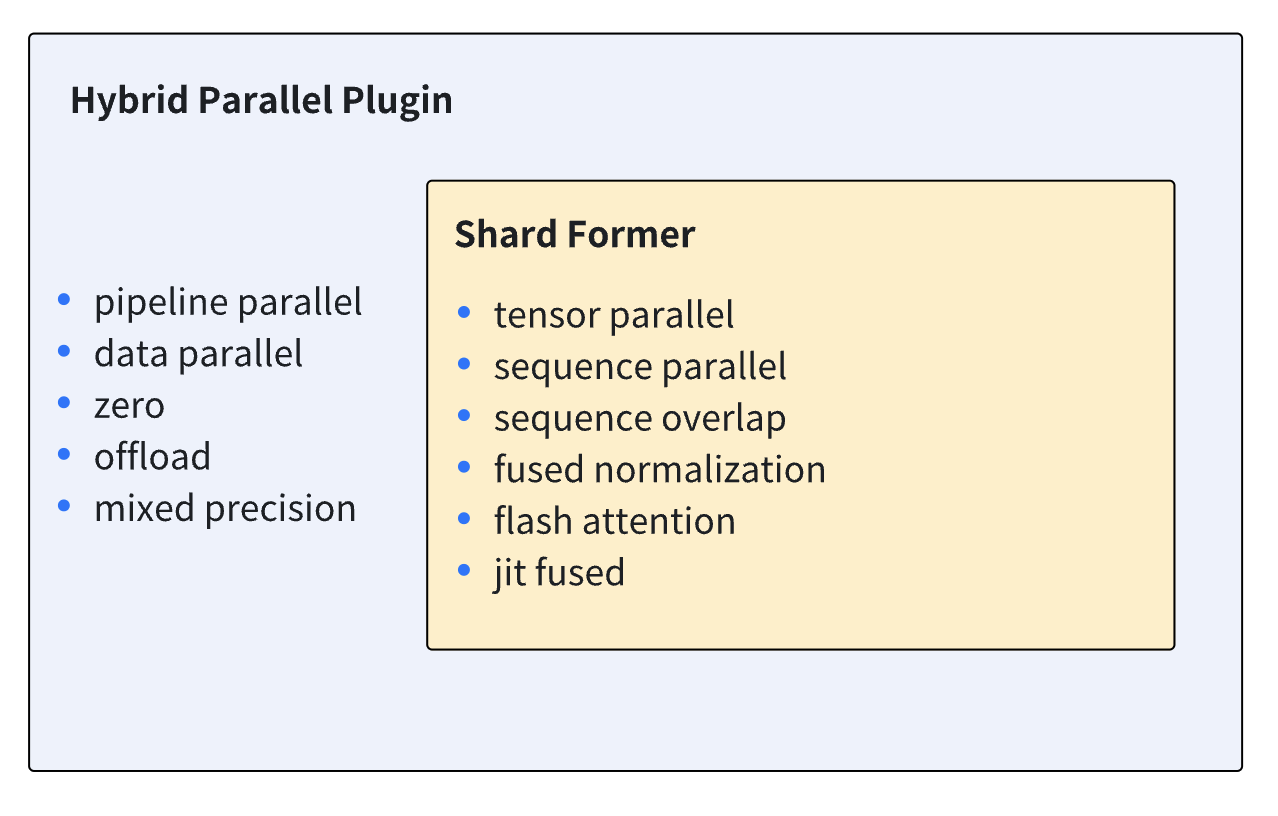

Booster Plugins

Configure Blocks with Fixed-Point Output - MATLAB & Simulink - MathWorks Nordic

(beta) Dynamic Quantization on BERT - PyTorch Tutorials 2.2.1+cu121 documentation