ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network


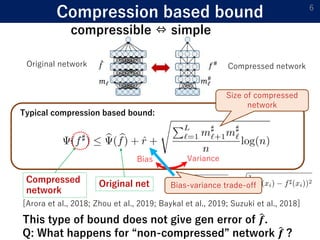

1) The document presents a new compression-based bound on the generalization error of large deep neural networks. The bound applies to the original network even when it has not been explicitly compressed.

2) It shows that if the weight matrices and covariance matrices of a trained network are near low rank, then the network has a small intrinsic dimensionality and can be compressed efficiently.

3) This makes it possible to derive a tighter generalization bound than existing approaches, which offers insight into why overparameterized networks generalize well despite having more parameters than training examples.
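
The low-rank condition in point 2 can be illustrated numerically. The following is a minimal sketch, assuming a synthetic weight matrix rather than a trained network. The helper names `effective_rank` and `truncate`, the 99% spectral-energy threshold, and the matrix sizes are illustrative assumptions and are not taken from the paper. It only shows how a near low-rank matrix can be stored with far fewer parameters at small approximation error. It does not compute the generalization bound itself.

```python
import numpy as np


def effective_rank(matrix, energy=0.99):
    """Smallest r whose top-r singular values hold `energy` of the squared spectrum."""
    s = np.linalg.svd(matrix, compute_uv=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    r = int(np.searchsorted(cumulative, energy)) + 1
    return min(r, len(s))


def truncate(matrix, rank):
    """Best rank-`rank` approximation in Frobenius norm (truncated SVD)."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank]


# Hypothetical layer: a rank-8 signal plus small dense noise (assumed sizes).
rng = np.random.default_rng(0)
m, n, true_rank = 256, 256, 8
weight = rng.standard_normal((m, true_rank)) @ rng.standard_normal((true_rank, n))
weight += 1e-3 * rng.standard_normal((m, n))

r = effective_rank(weight)
approx = truncate(weight, r)
rel_error = np.linalg.norm(weight - approx) / np.linalg.norm(weight)

full_params = m * n            # dense storage of the layer
factored_params = r * (m + n)  # storage of the two rank-r factors

print(f"effective rank: {r}")
print(f"relative Frobenius error: {rel_error:.2e}")
print(f"parameters: {full_params} dense vs {factored_params} factored")
```

The same measurement could be applied to the empirical covariance matrix of a layer's outputs. A rapidly decaying spectrum in both matrices is the kind of structure that point 2 describes as making a network compressible.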

ICLR 2020
