Estimating the Size of a Spark Cluster

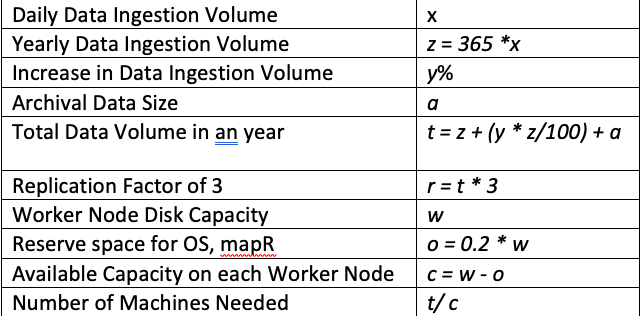

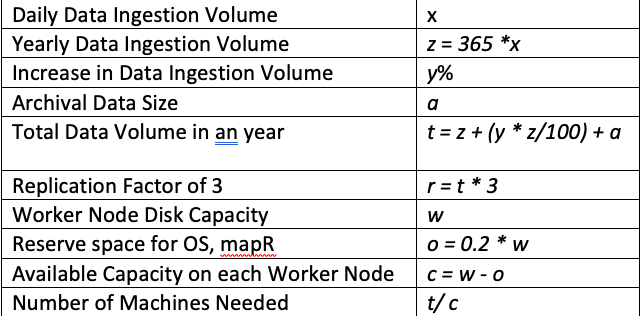

How many worker nodes should your cluster have? How should each worker node be configured? All of this depends on the amount of data you will be processing. In this post I will…
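The sizing question above can be made concrete with a back-of-the-envelope calculation. The sketch below is not the method from the post (which is truncated here). It rests on common rules of thumb that are assumptions, not facts from the source: about 5 cores per executor, 1 core and 1 GB per node reserved for the OS and daemons, Spark's default executor memory overhead of max(384 MiB, 10% of heap), and roughly one task per 128 MB input partition (the default `spark.sql.files.maxPartitionBytes`). The `target_waves` parameter (how many rounds of tasks you are willing to wait for) and the names `NodeSpec`, `plan_executors`, `nodes_for_data` and `spark_conf` are hypothetical.

```python
import math
from dataclasses import dataclass

# Rule-of-thumb constants: assumptions for this sketch, not values from the post.
CORES_PER_EXECUTOR = 5        # a commonly cited executor size
RESERVED_CORES_PER_NODE = 1   # left for the OS and node-manager daemons
RESERVED_MEM_GB_PER_NODE = 1  # likewise for memory
OVERHEAD_FRACTION = 0.10      # default spark.executor.memoryOverhead factor
MIN_OVERHEAD_GB = 0.375       # 384 MiB floor of the default overhead


@dataclass
class NodeSpec:
    cores: int
    memory_gb: float


@dataclass
class ExecutorPlan:
    executors_per_node: int
    executor_cores: int
    executor_memory_gb: int


def plan_executors(node: NodeSpec) -> ExecutorPlan:
    """Split one worker node into equally sized executors."""
    usable_cores = node.cores - RESERVED_CORES_PER_NODE
    executors = usable_cores // CORES_PER_EXECUTOR
    if executors < 1:
        raise ValueError("node has too few cores for the assumed executor size")
    slot_gb = (node.memory_gb - RESERVED_MEM_GB_PER_NODE) / executors
    # Heap plus overhead must fit in the slot: heap + max(384 MiB, 10% of heap).
    heap_gb = slot_gb / (1 + OVERHEAD_FRACTION)
    if heap_gb * OVERHEAD_FRACTION < MIN_OVERHEAD_GB:
        heap_gb = slot_gb - MIN_OVERHEAD_GB
    if heap_gb < 1:
        raise ValueError("node has too little memory for the assumed executor size")
    return ExecutorPlan(executors, CORES_PER_EXECUTOR, math.floor(heap_gb))


def nodes_for_data(data_gb: float, node: NodeSpec,
                   partition_mb: int = 128, target_waves: int = 3) -> int:
    """Estimate worker count so all input partitions finish in ~target_waves waves."""
    plan = plan_executors(node)
    slots_per_node = plan.executors_per_node * plan.executor_cores
    tasks = math.ceil(data_gb * 1024 / partition_mb)  # one task per input partition
    return max(1, math.ceil(tasks / (slots_per_node * target_waves)))


def spark_conf(plan: ExecutorPlan, nodes: int) -> dict:
    """Translate the plan into standard Spark executor settings."""
    return {
        "spark.executor.instances": str(plan.executors_per_node * nodes),
        "spark.executor.cores": str(plan.executor_cores),
        "spark.executor.memory": f"{plan.executor_memory_gb}g",
    }


if __name__ == "__main__":
    worker = NodeSpec(cores=16, memory_gb=64)
    plan = plan_executors(worker)
    nodes = nodes_for_data(100, worker)
    print(plan, nodes)
    print(spark_conf(plan, nodes))
```

For a 16-core, 64 GB worker this yields three 5-core executors with a 19 GB heap each, and about 18 such workers for 100 GB of input. Treat the result as a starting point to refine against what the Spark UI shows (spill, GC time, task duration), not as a final answer.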

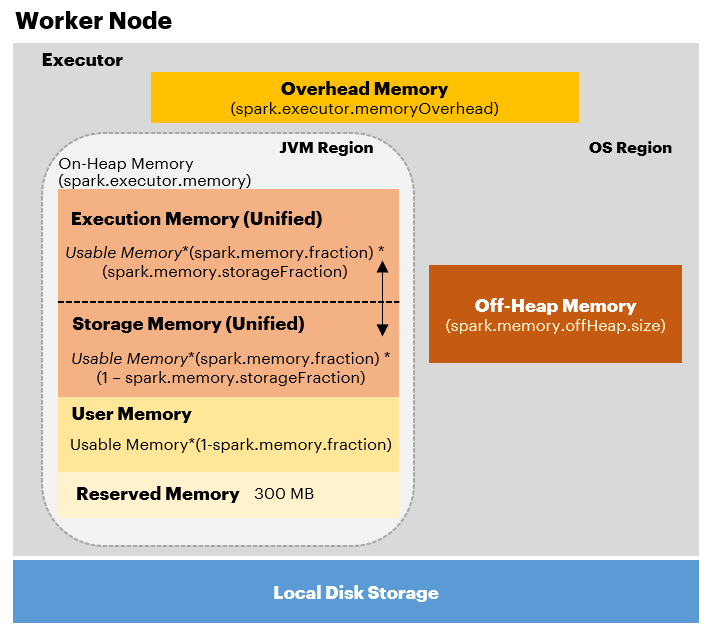

Explain Resource Allocation configurations for Spark application

Breaking the bank on Azure: what Apache Spark tool is the most cost-effective?, Intercept

How Duolingo Reduced Their EMR job Cost by 55% Using Our Gradient Solution

Electronics, Free Full-Text

Leveraging resource management for efficient performance of Apache Spark, Journal of Big Data

sparklyr - Using sparklyr with an Apache Spark cluster

How to improve FRTB's Internal Model Approach implementation using Apache Spark and EMR

Spark Performance Tuning: Spill. What happens when data is overload your…, by Wasurat Soontronchai

How Adobe Does 2 Million Records Per Second Using Apache Spark!