AI and the paperclip problem

Philosophers have speculated that an AI given a task such as making paperclips might cause an apocalypse by learning to divert ever-increasing resources to that task, and then learning to resist our attempts to turn it off. This column argues that, to do so, the paperclip-making AI would need to create another AI that could acquire power both over humans and over itself, and so it would self-regulate to prevent this outcome. Humans who create AIs with the goal of acquiring power may be a greater existential threat.

How An AI Asked To Produce Paperclips Could End Up Wiping Out

What Is the Paperclip Maximizer Problem and How Does It Relate to AI?

What Is The 'Waluigi Effect,' 'Roko's Basilisk,' 'Paperclip Maximizer' And 'Shoggoth'? The Meaning Behind These Trending AI Meme Terms Explained

Kill or cure? The challenges regulators are facing with AI

What is the paper clip problem? - Quora

Jake Verry on LinkedIn: As part of my journey to learn more about

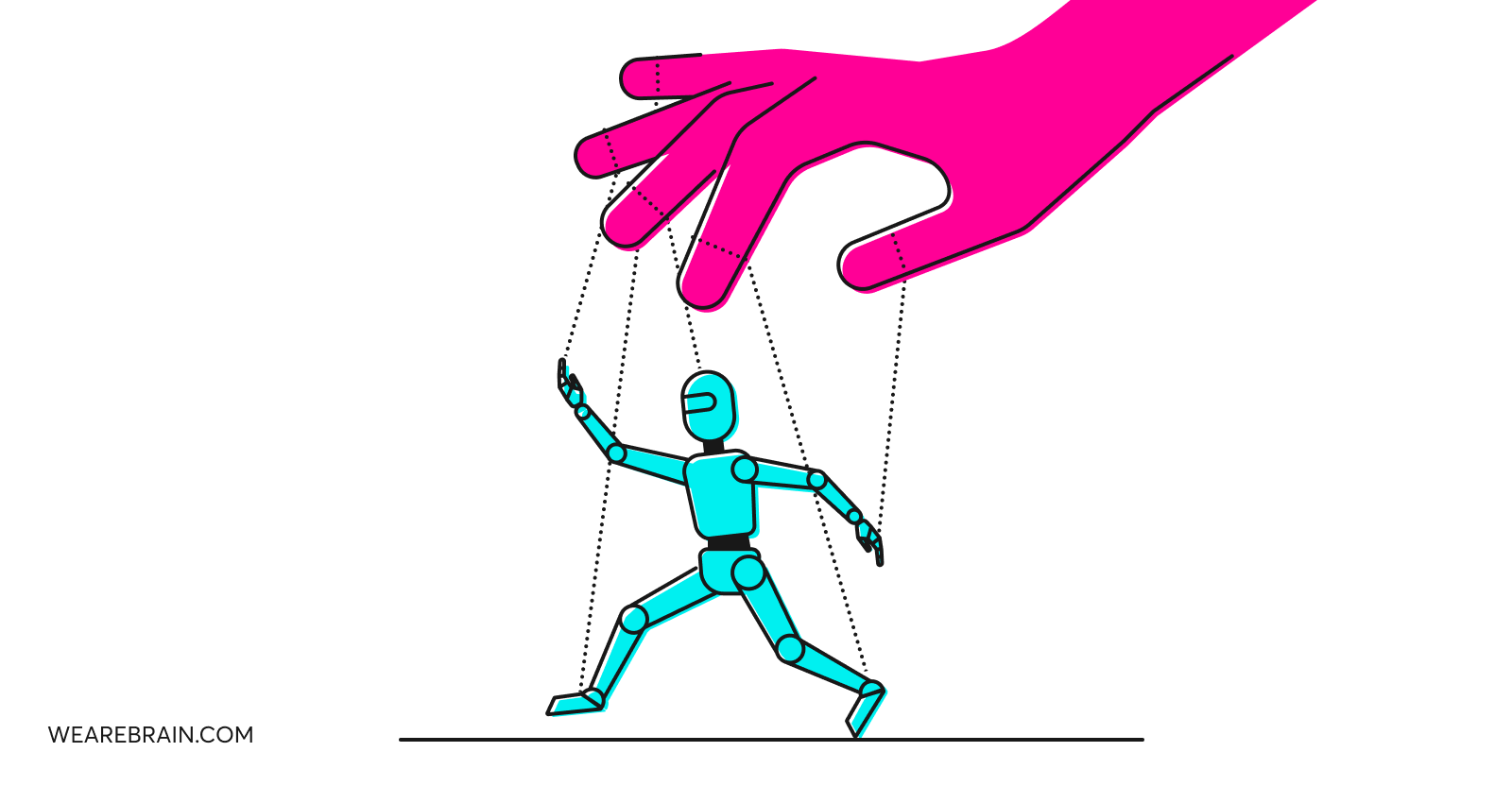

The AI Control Problem (and why you should know about it) - WeAreBrain

Artificial General Intelligence: can we avoid the ultimate existential threat?

Capitalism is a Paperclip Maximizer